Tagged: 2-pop, Audio sync, delay, delay calculator, gibberish, milliseconds, Video sync

-

Lining up audio from different recorders

Posted by Jesse Lewis on October 30, 2025 at 5:39 am

Dear MP fam!

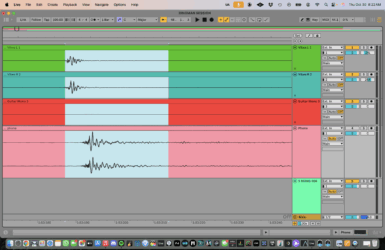

I have a question about lining up transients. (I’ve included a screenshot of my session, where I am “slating” with a handclap.)

This particular session was recorded out in the forest. It’s a duet with electric guitar and vibraphone. I have a pair of stereo mics on the vibraphone, and one microphone right on my guitar amp. So we have some pretty nice close mics for detail. My iPhone was situated about 15 feet in front of us capturing both video and audio. I’d like to add in the audio from the iPhone to add a little stereo capture of the space.

My question relates to where exactly I should put the audio from the phone. Because it wasn’t recorded live into my DAW with the other mics, I have to drag it in and line it up manually. This is the same process I’ve done for many of my tracks, but I’m curious if any of you could give me some guidance about where you would drag it (milliseconds-wise) in relation to the close mics. In other words, when looking at my handclap “slate”, would you try to line up the iPhone transient exactly with the close mic(s), or would you put it slightly behind, because naturally it would take longer for the sound of the handclap to travel the greater distance to where the phone was set up? Is there some kind of scientific formula for how many milliseconds you should add in track delay per foot of distance? (I’m clearly looking at you, @-PT.) I’m guessing the answer is just “nudging” the track around until it feels good!? Curious if there are any “no no’s” to watch out for…

Thanks my friends!

Love,

JLew

Jesse Lewis replied 3 weeks ago 3 Members · 27 Replies -


-

Jesse,

I have a question for you first. Did this clap emanate from the vibe player’s playing position? Or maybe from where you were playing?

PT

-

Good Question, Paul! I’m attaching a picture of the scene for more clarity. This picture is my position right as I was clapping my hands. You can also get a sense of where the iphone was positioned in relation to us by the picture. (I’m also attaching an unmixed snippet of audio from the session just to give you a sense of the vibe…)

-

Jesse,

Although I couldn’t spot it, I’m guessing the phone was somewhere on the “desk” on the stand. I was just checking it was visually in front of the band.

As you compare the waveforms of the stereo vibe mics and your stereo phone recording, we can clearly see that the phone, which was farther away from the sound source, the vibes, shows the start of the sound further to the right on the DAW tracks’ display. We’re basically looking at an X-Y graph. The X axis (horizontal) displays time, the Y axis (vertical) displays amplitude. We’re looking at your handclap. For a white man in flannel, that is a pretty damn funky lookin’ clap. It arrives at different times because the physical distances from the source to the microphones are different. The phone mics are farther away physically, so the sound of the clap (vibrations through the air) arrives later. Later shows up as a smidge to the right. View the screenshot… check? CHECK!

I’m going all science-y on the explanation here so as to provide backstory for those that may not yet understand tech. I’ve had 4 kids from the local college sound recording program shadowing me at the historic State Theatre this month and found a groove when I went really basic and broke things down to easily digestible nuggets.

So back to the original question …

The relative polarity of the phone recording to the vibes mics matters, especially if you slide the (later arriving) phone recording to align with the start time of the first arriving (vibes) signals. Find the first upward peak on the vibes and use that as your target. Find the first peak on the phone recording; if it is downward facing, reverse polarity and then slide it over to the left to align with the first arriving positive peak of the vibes mic. Realize this is fucking time traveling magic. With the click and drag of a mouse, we rearrange time. Think about the poor bastards working at Stonehenge this weekend. As we fall back with a quick digital reset, they’ve got to move all those big boulders one o’clock back.
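A rough sketch of that first-peak check in Python, just to make the steps concrete. It assumes each track is a plain list of sample values, and the 50% threshold and the function names are made up for illustration. In practice you would do this by eye in the DAW, exactly as described above.

```python
def first_peak(samples, threshold_ratio=0.5):
    """Return (index, value) of the first local extremum whose magnitude
    reaches threshold_ratio of the loudest sample in the track.
    threshold_ratio is an arbitrary illustrative choice."""
    loudest = max(abs(s) for s in samples)
    if loudest == 0:
        raise ValueError("track is silent")
    threshold = threshold_ratio * loudest
    for i in range(1, len(samples) - 1):
        mag = abs(samples[i])
        if (mag >= threshold
                and mag >= abs(samples[i - 1])
                and mag >= abs(samples[i + 1])):
            return i, samples[i]
    raise ValueError("no peak found")


def align_to_reference(reference, late_track):
    """Compare the first peaks of two tracks.

    Returns (flip_polarity, shift_samples):
      flip_polarity  True if the peaks point in opposite directions
      shift_samples  how many samples to slide late_track to the left
    """
    ref_index, ref_value = first_peak(reference)
    late_index, late_value = first_peak(late_track)
    flip_polarity = (ref_value > 0) != (late_value > 0)
    shift_samples = late_index - ref_index
    return flip_polarity, shift_samples


# Tiny made-up "clap" waveforms: close mic peaks upward at sample 3,
# phone peaks downward at sample 7.
close_mic = [0.0, 0.0, 0.1, 0.9, -0.4, 0.1, 0.0, 0.0, 0.0, 0.0]
phone = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, -0.05, -0.6, 0.3, 0.0]
print(align_to_reference(close_mic, phone))
```

With these toy numbers the phone track would need a polarity flip and a slide of 4 samples to the left.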

I would first try aligning the phone recording with the mics, then add a healthy level of it to see if that’s good au naturel ambience, i.e. gentle wind, leaf crunching, geese, etc.

Another experiment would be to use the phone recording as the high passed send to a reverb.

re: milliseconds of delay

When combining two identical signals where one is later: inside of 20ish milliseconds they sound as one, but with some tonal consequences. At 50 milliseconds apart the two sounds will start to separate into two distinct arrival times. At 100 milliseconds apart you have arrived at Graceland, i.e. the “Elvis slapback.”
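One common way to picture the “tonal consequences” of short delays is comb filtering: when a signal is summed with an equal-level delayed copy of itself, frequencies whose half-period matches the delay cancel. The sketch below lists those cancellation frequencies for a given delay. It assumes an idealized case (identical signals, equal level), which a phone 15 feet away from close mics will only loosely resemble, and the function name is hypothetical.

```python
def comb_notches_hz(delay_ms, max_hz=20000.0):
    """Frequencies (Hz) cancelled when a signal is summed with an
    equal-level copy of itself delayed by delay_ms.
    Notches fall at odd multiples of 1 / (2 * delay)."""
    if delay_ms <= 0:
        return []
    delay_s = delay_ms / 1000.0
    notches = []
    k = 0
    while True:
        freq = (2 * k + 1) / (2.0 * delay_s)
        if freq > max_hz:
            break
        notches.append(freq)
        k += 1
    return notches


# A 1 ms offset puts the first few notches at roughly 500, 1500, 2500 Hz.
print([round(f) for f in comb_notches_hz(1.0, max_hz=3000.0)])
```

Longer delays push the first notch lower and pack the notches closer together, which is part of why nudging a track by a few milliseconds can change the tone so noticeably.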

Choose wisely

-

Paul! Thanks (as always) for your help on this. So many great pieces of info in your response…and you know i gets funky with my clappin’!

I find it interesting that you suggested moving the iPhone audio back to the left on the X axis to align it with the vibes audio. This was kind of the crux of my question, and it seems like both you and @Dana had a similar response: that I should align the phone with the close mic/mics. I would have assumed that I should actually drag it later in time, which would represent the more natural placement of where the microphone actually was in relation to the source of the sound. It sounds to me like one thing that’s very important, regardless of milliseconds, is that I get the phasing correct! And for the rest, I should move it around to see what feels/sounds the best.

I’ve actually been struggling with this mix because the mic on my guitar amp picked up my guitar sooner than the mics on the vibes picked up the bleed from my amp (and the left hand mic and right hand mic also got the guitar at different times from each other). So I have sound arriving at all the mics at different times. This type of stuff is a real challenge for me because usually I’m just recording one instrument at a time.

I’ll have some sounds to share soon…

Thanks again for all the info and great advice!

JBear

-

Jesse,

You’ve definitely opened a can of worms thinking about managing different arrival times, huh?

Your initial thought of pushing earlier arrival times back in time (guitar amp/vibes mics) to coincide with the latest arriving one (phone mics) is logical. It’s how we manage delay tower speakers at festivals: hold (delay) the signal feeding the delay tower until the sound from the main speakers has made that trip through the slow medium of air at the speed of sound. As Dana pointed out, that’s roughly a foot per millisecond. The two sources of sound can combine constructively if time- and polarity-aligned. That translates to greater intelligibility and an improved S/N (signal-to-noise) ratio: more dry signal level than ambient signal level.
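To put numbers on the “push everything back to the latest arrival” idea, here is a minimal Python sketch using the foot-per-millisecond rule of thumb. The mic names and distances in the example are hypothetical, not measurements from the session.

```python
def delays_to_latest(distances_ft, feet_per_ms=1.0):
    """Given each mic's distance (in feet) from one sound source, return
    the delay in ms to add to each track so that every track lines up
    with the latest-arriving one.
    feet_per_ms=1.0 is the rule of thumb from this thread."""
    arrival_ms = {name: dist / feet_per_ms for name, dist in distances_ft.items()}
    latest = max(arrival_ms.values())
    return {name: latest - t for name, t in arrival_ms.items()}


# Hypothetical distances from the handclap to each mic, in feet.
example = {"amp mic": 0.5, "vibes mics": 3.0, "phone": 15.0}
print(delays_to_latest(example))
```

The same arithmetic covers the delay tower case: a tower 100 feet from the mains would be held back by roughly 100 ms under this rule of thumb.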

I’m really disappointed that the Stonehenge joke in the first reply didn’t land.

Because dragging and dropping start times in the DAW is so easy, you can opt to “pull” the late arrivals back to your self-declared start time. That’s what we were suggesting: “pulling” the phone mic arrivals back to the vibe overheads. Your close mic’ed guitar would be the first arrival time to show up on the DAW session if you’re both playing the ONE! Just pull all the simultaneously struck “ONES” back to your amp arrival. Voila! Signal alignment may help clean up the sound OR not. If there’s not too much other-than-intended signal in the microphone, the time misalignment may add spatial character, which could be a better choice.

I believe it was Aristotle that said, and I’m paraphrasing here, “The more you know, the more you realize you don’t.” I just added the worms part.

-@PT

-

I totally understand what you’re dealing with, homey! If you wanna email me a link to the files, maybe I can reply here with a quick video demo – which might be easier and more useful than describing these complex ideas and techniques with typed words, haaha.

-

Dear P $ aka @-PT ,

I’m in the process of mixing another “nature recording” that i did this past summer – this time electric guitar (miced and DI’d), acoustic bass (miced and DI’d), and percussion (miced).

I’m lining up the iphone audio (phone was about 10 ft right in front of us) per your and @Dana’s advice and I’m hearing a voice say “try hi-passing the iphone and sending it to a reverb…” Then I remember that you had written this in the above thread: “Another experiment would be to use the phone recording as the high passed send to a reverb.”

I’ve been messing around with this a little and it’s really cool.

I’m wondering if you might elaborate a little on this idea so I make sure I’m getting it right?

Do you essentially mean — get a good mix of all the close miced instruments and then add in the stereo iphone recording, but hi-pass the iphone audio and send it to a reverb? How much would you suggest filtering? How much reverb are you suggesting? Like big reverb? Or just some kind of convolution/space kind of thing? Also — would this be the only iphone track? Or would I duplicate it and keep one in with no eq and then add the second one which is hi-passed and has the verb?

Very interested in your thoughts when you have a moment.

Best,

JLew

-

Jesse,

P-$ here.

“I’m a white man, a white man in black socks.

I wear grey shorts; tank tops and dreadlocks.”

No, I’m sorry. I think you wanted the other one…

Regarding your question. Yes, I was suggesting you use the phone mic as an ingredient in your mix of close miced parts. Something to give ambience and support the viewers’ point of view of being in the woods. Slight breeze, rustling leaves, woodland critters crunching the leaves as they scoot through. Speaking of critters, I would avoid the geese motif you used in the rowboat series, that’s so last summer.

I’ve not tried it, but I do believe using a separate copy of the phone mic recording, slightly (100 Hz) high passed, as the send to a plate reverb (H3000 Tight and Bright) might could add a sense of hearing reflections off the trees. Some sort of plate verb program meant for percussion that includes a bunch of early reflections and small room (<1 sec) parameter options.
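For anyone curious what a gentle 100 Hz high pass does to the signal before it hits the reverb, here is a bare-bones first-order (6 dB/octave) high-pass filter in Python. It is only an illustration under assumed settings (48 kHz sample rate, a simple RC-style filter); the EQ in a DAW will typically offer steeper slopes and is the practical tool for this.

```python
import math


def high_pass(samples, cutoff_hz=100.0, sample_rate=48000):
    """First-order RC-style high-pass filter.
    cutoff_hz and sample_rate defaults are illustrative assumptions."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_x = 0.0
    prev_y = 0.0
    for x in samples:
        # Each output follows changes in the input but leaks toward zero,
        # so steady (very low frequency) content fades away.
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


# A constant (0 Hz) input is filtered out almost completely after one second.
steady = high_pass([1.0] * 48000)
print(abs(steady[-1]) < 1e-6)
```

Rumble and low-end bleed below the cutoff get turned down before the reverb, while the clap, leaves, and upper content pass through largely untouched.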

Creating an inviting and believable audio setting first can help you hook your audience before the musical story unfolds, much like the cinematic effect of looking out over desert in springtime bloom but hearing what appears to be a rattling sound. Adios cowboy.

-

“Geese motifs are SOOO last summer”

Hahaha – I seriously nearly spit out my coffee, @-PT

Great verby tips, too. Sounds like some fun things to try – u rule!

-

Dear P $ – as usual, coming in with the great info. I appreciate it @-PT !

I KNOW i’m being annoying now, but just for my own clarification — are you suggesting i use 2 iphone tracks? One hi-passed and going to the reverb AND a duplicate without the eq and verb?

OR – were you suggesting only using the one eq’d/verbed iphone track?

ps – yes, i agree- screw those jive geese….

JBear

-

Jesse,

Yes, I’m trying to convey the use of two different instances of the phone audio. One for the ambience of being live in the woods listening to the music so it doesn’t come across bone dry. (Who have I become?) If that stereo phone track needs a little EQ to help it talk with the music better, so be it. Anything that helps the illusion is fine.

The second use of the phone tracks would be as the driving source of the highly reflective/small room plate sound that I’m suggesting be the “unnatural” effect-y ambience sound. Perhaps wider to exaggerate the space. Tonal seasoning to taste, but I’m guessing a high passed version here will do two things. One, let the close miced instruments keep their low end distinct and intact, and two, allow you to subtly and magically change the listener’s perception of the environment when and if the music hits its stride, becomes greater than the sum of its parts, and transports the listener.

-

Thank you for this! I can dig it! I’ll get to work on this and hope to have some audio to share soon. Many thanks,

JLew

-

Great question, Jesse – and great response, @-PT!

Is the iphone the only camera position, or is this a multi-cam setup?

For me, I’d keep this pretty simple:

- Ensure the clap audio in your close mics lines up with the clap motion in the video. NOTE: mute or disregard the iphone audio at this point! Move the iphone video (with its muted audio) around, frame by frame, until it syncs up nicely with your close-mic audio tracks.

- Now, unlink the iphone audio from the iphone video so you can move the iphone audio independently from its video track.

- Next, move the unlinked iphone audio so that its “clap” syncs up with your close mic claps. NOTE: sometimes I’ll change my vid editor timeline to Samples at this point if I’m unable to achieve good sync/phase using the Frames timeline.

- Unmute the stereo iphone audio track and balance against your direct mics to taste!
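A quick bit of arithmetic shows why the Frames timeline can be too coarse for the audio-to-audio step above. The sketch assumes 48 kHz audio and 30 fps video, which are common values but not confirmed for this session.

```python
def samples_per_frame(sample_rate=48000, fps=30.0):
    """Number of audio samples that pass during one video frame."""
    return sample_rate / fps


def ms_per_frame(fps=30.0):
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


print(samples_per_frame())       # samples in one frame at 48 kHz / 30 fps
print(round(ms_per_frame(), 1))  # one frame in milliseconds
```

At these settings a single frame is about 33 ms, far longer than the offset between a close mic and a phone 15 feet away, so frame-by-frame nudging is fine for picture sync but not for lining up transients.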

Bonus Round: Add a millisecond-based delay plugin to your iphone audio set to 100% Wet, and try sweeping around the delay time (say, btwn 0 and 100ms) to simulate control over how far away the iphone microphone is. By adding 15 milliseconds, the iphone mic will sound as if it’s roughly 15 feet away from your direct mics! (Yay, the science part!) NOTE: honestly, for mixing purposes, at this point I would just ditch the science and use my ears while sweeping around the ms delay time on the plugin until I settle on something that sounds cool (who knows, it might sound amazing set to 1137ms!).

Can’t wait to hear what you end up with!

🤓

PS – here’s some speed of sound info from the ol’ Google machine:

It would take approximately 13.3 milliseconds for sound to travel 15 feet in dry air at room temperature.

The calculation is based on the following:

- Speed of sound: Approximately 1,125 feet per second (fps) at 20 °C (68 °F).

- Formula: Time = Distance / Speed

- Calculation in seconds: 15 feet / 1,125 fps = 0.01333 seconds.

- Convert to milliseconds (1 second = 1,000 milliseconds): 0.01333 seconds * 1,000 ms/second ≈ 13.3 ms.

A common approximation used in live sound engineering is that sound travels about one foot per millisecond (1 ms/ft). Using this simple rule of thumb, the time would be approximately 15 milliseconds. The more precise calculation above gives a more accurate value.
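The calculation above can be sketched as a tiny Python helper. The 1,125 fps figure comes from the post; the 48 kHz sample rate and the function names are assumptions added for illustration.

```python
SPEED_OF_SOUND_FPS = 1125.0  # feet per second at about 20 °C, per the post


def travel_time_ms(distance_ft, speed_fps=SPEED_OF_SOUND_FPS):
    """Time = Distance / Speed, converted to milliseconds."""
    return distance_ft / speed_fps * 1000.0


def travel_time_samples(distance_ft, sample_rate=48000,
                        speed_fps=SPEED_OF_SOUND_FPS):
    """Same travel time expressed in whole audio samples
    (sample_rate is an assumed session setting)."""
    return round(distance_ft / speed_fps * sample_rate)


print(round(travel_time_ms(15.0), 1))  # 15 ft phone distance, in ms
print(travel_time_samples(15.0))       # the same offset in samples
```

For the 10 ft phone position mentioned later in the thread, the same helper gives roughly 8.9 ms.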

-

Thanks so much Dana! This is very helpful!

Love,

Abuela

-

Whelp .. my “quick demo” turned into a 90-minute start-to-finish alignment and mixing workshop 😂. I hope you and others here find this helpful and interesting!

This is an unedited, single-take vid, and there are a couple minor audio dropouts but they pass quickly.

Ooh! And … you’ll get an early glimpse at my ongoing studio makeover!

So, grab a bag of popcorn, hit full-screen mode on the video, beam it up to your living room TV and NERD OUT!

BTW, @JLew – this music and the fall foliage setting are absolutely gorge!

-

Dear @Dana !

Brother! This is so unbelievably next LEVEL!!! Thank you for taking the time to go through all of this and record it for the benefit of all of us here at mix protege! I learned SOOOO much. This lesson is applicable to so much of the music I’m recording, it’s going to help make my productions sound much better and more representative of what it feels/sounds like when I’m actually playing the music.

We are truly blessed to have you in our lives. Thank you for creating this space and for all that you’re giving to this community and the greater world of music!

Thanks also to @-PT for always offering your knowledge and going so deep with your expertise as well!

Mucho Love,

JLew

-

Aww, that means so much @JLew – thank YOU for sharing your files and your beautiful music with us all!!

Can’t wait to hear and see your finished video release!! 😍🍁

-

Thanks, brother @-PT! And thank you again for all your incredible wisdom here in this thread and throughout Mix Protégé!! ⚡️💡🤍

-

Dear MP Fam!

Just throwing up the final video and audio from this thread now that they are “out in the world.” Thank you so much to all that contributed your time and expertise to helping me improve my mixing. @Dana — thanks so much for going so over the top on this thread (and on this platform) and making that video demonstration. I’ve gone back to it several times since to rewatch parts of it, and it has been so helpful. Thanks @-PT for your valuable insights on this thread as well.

-

Wooo! Yeah, @JLew! I love this, man – congrats on the release!! Gonna add the Spotify versions to the Mix Protégé playlist!

Thank you so much for all your incredible musical contributions here, your thoughtful questions and sonic curiosities, and your thoroughly pozzy* MP vibes! You rule!

_________________

*I had a great session in Kernersville, NC years ago. The assistant engineer there was so fun and funny. I commented on one of the studio’s many interesting ceramic figurines and incense sculptures. I was like, “that one there is a lil spooky”, to which she replied, with zero irony, “yeah for sure – that one definitely gives off some neggy vibes.” 😂. I’ve been using that ever since lolol.

-
